The Role of Space Exploration in Creating Opportunities for Future Generations

Throughout history, humanity’s fascination with the stars has driven creativity, discovery, and imagination. From the early days of rocketry to today’s Mars rovers and commercial space ventures, space exploration has grown from a scientific endeavor into a global undertaking that will help shape our future. In 2025, the push to explore beyond Earth is not only about reaching other worlds. It is also about improving life on Earth, inspiring future generations, and securing humanity’s long-term survival. The influence of space exploration extends far beyond rocket launches: it shapes education, technology, the economy, and even our collective sense of purpose.

1. The Beginning of a New Era in Space Exploration

Space exploration has undergone a resurgence since the turn of the 21st century. Today’s missions are increasingly driven by public-private partnerships rather than by government agencies alone. Companies such as SpaceX, Blue Origin, and Rocket Lab work with the National Aeronautics and Space Administration (NASA) and other international agencies to broaden access to space. This new era emphasizes sustainability as well as exploration. Reusable rockets, space habitats, and other new technologies could make interplanetary travel possible for future generations.

2. Motivating the Next Generation of Creative Thinkers and Doers

Few things spark curiosity and imagination like the wonders of space. Every mission, from lunar exploration to the discoveries of the James Webb Space Telescope, inspires young scientists, engineers, and explorers to pursue their ambitions. Educational programs built around space research are encouraging students to pursue careers in STEM fields (science, technology, engineering, and mathematics). For today’s young people, space is no longer a distant dream. It is a real frontier they can aspire to reach.

3. New Technologies Born from Space Research

Many technologies we use every day were first developed for space exploration. Space-driven innovation has changed life on Earth in many ways, contributing to satellite navigation, wireless communication, new materials, and medical imaging. In 2025, space research continues to drive advances in robotics, artificial intelligence, and renewable energy. This shows that investment in exploration pays dividends across a wide range of industries.

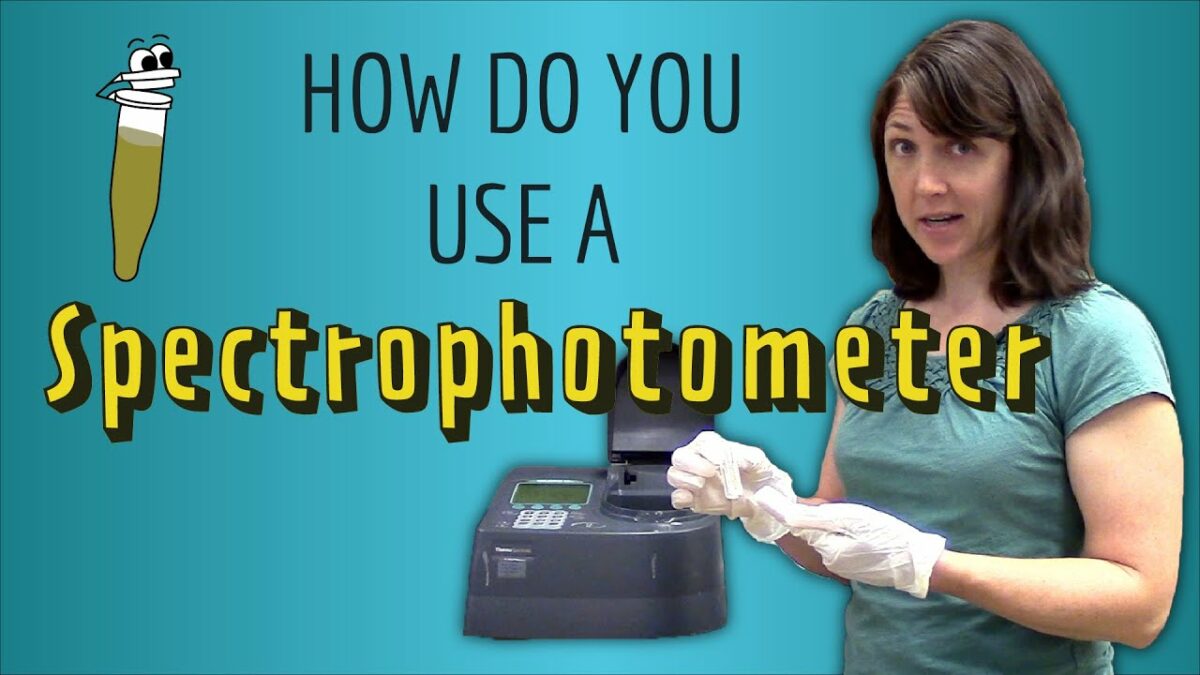

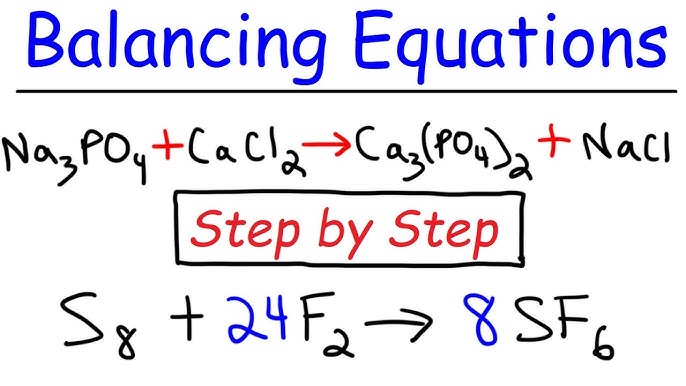

4. Space as a Laboratory for Scientific Discovery

Many of the conditions found in space are difficult to recreate on Earth. The microgravity environment aboard the International Space Station (ISS) lets scientists study biological, chemical, and physical processes in ways that were not previously possible. These experiments have driven substantial progress in medicine, agriculture, and materials research. For example, growing human tissue in space may eventually accelerate research into organ regeneration and disease. Studying plants in orbit is also helping us understand how to sustain life beyond Earth.

5. Building a Sustainable Future Beyond Earth

Sustainability is closely tied to the future of space exploration. Researchers are developing closed-loop life support systems that recycle air, water, and waste. These technologies could also improve environmental management on Earth. In the future, mining asteroids for minerals and using lunar resources for construction may reduce the demand for resource extraction on Earth. Learning to live sustainably in space offers valuable insights into conserving our planet’s limited resources.

6. The Role of Private Companies in Commercial Space Travel

Private entrepreneurs have helped turn space exploration from a prestigious government undertaking into a rapidly growing commercial industry. Companies such as SpaceX, Virgin Galactic, and Axiom Space are leading efforts to make access to space more affordable. As a result, private citizens, researchers, and even artists have the chance to experience space firsthand. This commercialization is increasing competition, lowering costs, and accelerating innovation. It helps ensure that future generations inherit a vibrant and inclusive space economy.

7. Mars and Beyond: The Next Frontier of Travel

Traveling to Mars is no longer science fiction. It is a goal that may be achievable within the next few decades. NASA’s Artemis missions to the Moon are intended in part to prepare humans for longer journeys into deep space. Meanwhile, robotic missions continue to explore the Martian surface in search of signs of life and usable resources. A permanent human presence on Mars would be a historic achievement for humanity. It would also teach us how to adapt, endure, and thrive in unfamiliar environments, skills that are crucial to the long-term survival of our species.

8. How International Collaboration Is Advancing Space Exploration

Space exploration is widely recognized as a powerful symbol of global cooperation. Multinational projects such as the International Space Station (ISS) and the upcoming Lunar Gateway show what humanity can accomplish when countries work toward a common goal. In 2025, new partnerships among the United States, Europe, Asia, and emerging spacefaring nations are supporting the exchange of information, the sharing of resources, and the peaceful exploration of space. This global collaboration helps ensure that space remains a realm of shared progress rather than rivalry.

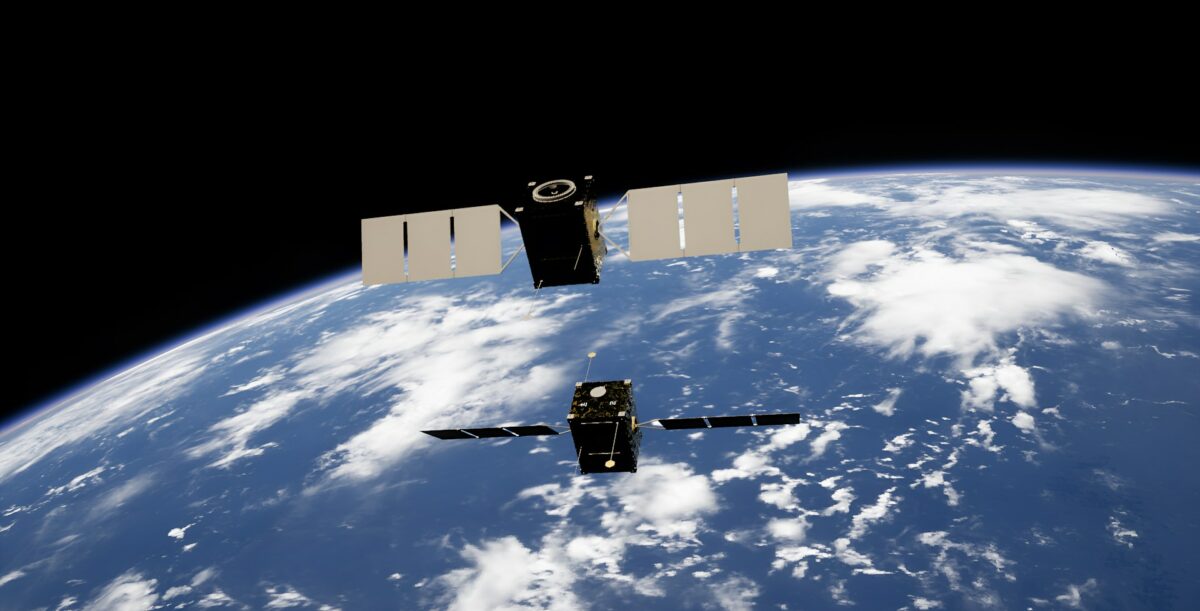

9. Observing Earth from Space to Safeguard the Planet

Satellites are essential for monitoring Earth’s climate, weather patterns, and natural disasters. Space-based observation systems provide early warnings of hurricanes, wildfires, and environmental degradation. These tools help governments and organizations respond to emergencies more quickly, saving lives and protecting ecosystems. Continued investment in satellite infrastructure will help give future generations a safer and better-informed society.

10. The Search for Life Beyond Earth

One of the most compelling reasons to explore space is to learn whether we are alone in the universe. Missions to Mars and Europa, along with surveys of exoplanets, are searching for signs of past or present life. The discovery of even microbial life would fundamentally change our understanding of biology, philosophy, and existence itself. This search offers a scientific challenge for future generations. It also reflects a deeply human desire to connect with the universe.

11. The Space Industry’s Contribution to the Growth of the Economy

The global space economy is expanding rapidly and is projected to surpass one trillion dollars in the coming decades. This growth is creating new jobs, industries, and educational opportunities. Fields such as aerospace engineering, data analytics, and satellite technology are becoming increasingly important contributors to national economies. For future generations, careers in the space industry may become as common and as important as careers in technology or finance are today.

12. The Ethical Implications of Expanding into Space

As space exploration expands, questions of ethics and responsibility are becoming increasingly important. Two of the most pressing concerns are the mining of extraterrestrial resources and the contamination of other worlds. To what extent do governments or companies have rights beyond Earth? Clear international regulations and environmentally responsible practices will help ensure that exploration benefits all of humanity. They will also help preserve the cosmos for our children and grandchildren.

13. Fostering a Global Perspective and a Sense of Unity

Perhaps the most valuable gift of space travel is perspective. Astronauts describe how seeing Earth from orbit changes the way we view both our planet and one another. It is a reminder that we all share a single fragile home suspended in the vastness of space. This perspective will continue to inspire compassion and cooperation in future generations. It will also foster a shared responsibility to protect both our planet and the cosmos we are only beginning to explore.

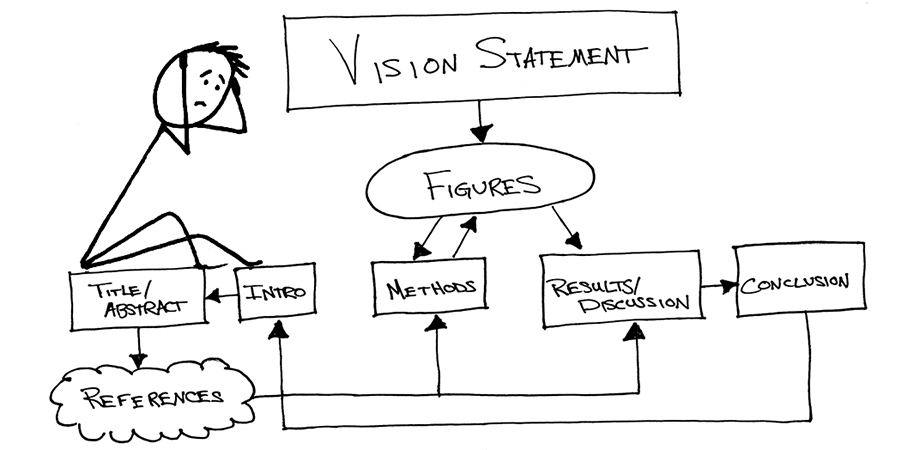

14. A Legacy Written Among the Stars

Space exploration is about more than rockets and planets. It is also about leaving a legacy. Every mission, satellite, and discovery lays a foundation on which future generations will build. The technologies, knowledge, and inspiration produced by space research shape our understanding of the cosmos and our destiny as a species. As we continue to reach for the stars, we are also striving for a brighter future, one defined by innovation, unity, and the relentless human drive to explore the unknown.