Web automation is the process of using scripts to control a web browser and perform tasks that would otherwise require human intervention. Selenium is a widely used tool for web automation that lets you write scripts to control browsers like Chrome, Firefox, and Safari programmatically. This guide covers setting up Selenium, basic and advanced browser interactions, and integration with other tools.

Table of Contents

- Introduction to Selenium

- Setting Up Your Environment

- Understanding Selenium Components

- Basic Operations with Selenium

- Advanced Web Interaction

- Handling Alerts, Frames, and Windows

- Working with Web Forms

- Taking Screenshots

- Handling JavaScript and Dynamic Content

- Managing Cookies and Sessions

- Running Tests in Headless Mode

- Integrating Selenium with Other Tools

- Debugging and Troubleshooting

- Best Practices for Selenium Automation

- Legal and Ethical Considerations

- Conclusion

1. Introduction to Selenium

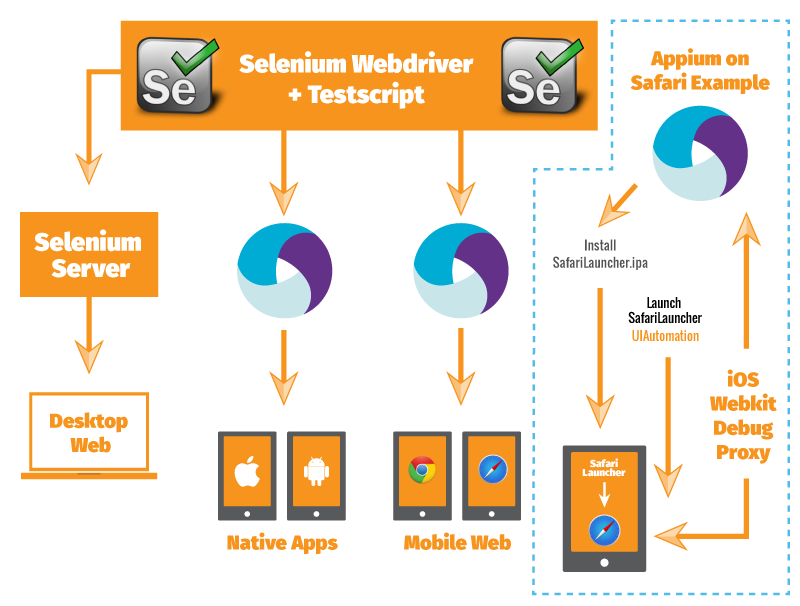

Selenium is an open-source tool for automating web browsers. It supports multiple browsers and operating systems and can be used for testing web applications, scraping web data, and performing repetitive tasks. Selenium provides a suite of tools, including:

- Selenium WebDriver: A browser automation tool that interacts directly with the browser.

- Selenium IDE: A browser extension for recording and playing back user interactions with the browser.

- Selenium Grid: A tool for running tests across multiple machines and browsers simultaneously.

Key Features

- Cross-Browser Testing: Supports major browsers like Chrome, Firefox, Safari, and Edge.

- Language Support: Compatible with multiple programming languages including Python, Java, C#, and JavaScript.

- Integration: Can be integrated with various testing frameworks and tools such as JUnit, TestNG, and Jenkins.

2. Setting Up Your Environment

Installing Python

Ensure Python is installed on your system. Download it from the official Python website.

Installing Selenium

Install the Selenium library using pip:

bash

pip install selenium

Installing WebDriver

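Selenium controls each browser through a browser-specific driver executable. Selenium 4.6 and later include Selenium Manager, which can download a matching driver automatically. With older versions, or if you prefer to manage drivers yourself, download the appropriate driver for your browser: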

- Chrome: ChromeDriver

- Firefox: GeckoDriver

- Edge: EdgeDriver

- Safari: Safari’s WebDriver is included with macOS and can be enabled from Safari’s Develop menu.

Setting Up WebDriver

Place the WebDriver executable in a directory included in your system’s PATH, or specify its location in your script.
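If you manage the driver yourself, it can help to confirm that the executable is actually visible on PATH before starting a script. The sketch below uses Python's standard `shutil.which` for that check. The helper name `find_driver` is an assumption made for illustration, and `chromedriver` and `geckodriver` are the usual executable names, which may differ on your platform.

```python
import shutil
from typing import Optional


def find_driver(executable: str) -> Optional[str]:
    """Return the full path of a driver executable found on PATH, or None."""
    return shutil.which(executable)


if __name__ == "__main__":
    # Report where each commonly used driver was found, if anywhere.
    for name in ("chromedriver", "geckodriver"):
        location = find_driver(name)
        print(f"{name}: {location or 'not found on PATH'}")
```

If a driver is reported as not found, either add its directory to PATH or pass its location explicitly in your script.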

3. Understanding Selenium Components

Selenium WebDriver

Selenium WebDriver provides a programming interface to control web browsers. It interacts with the browser by simulating user actions like clicking buttons and filling out forms.

Selenium IDE

Selenium IDE is a browser extension that allows you to record and play back user interactions with a web page. It’s useful for creating test cases without writing code.

Selenium Grid

Selenium Grid allows you to run tests across multiple machines and browsers simultaneously. It helps in scaling test execution and parallelizing tests.

4. Basic Operations with Selenium

Launching a Browser

To launch a browser, create a WebDriver instance:

python

from selenium import webdriver

driver = webdriver.Chrome() # or webdriver.Firefox(), webdriver.Edge(), etc.

driver.get('https://example.com')

Locating Elements

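You can locate elements with find_element() and a By strategy. The older find_element_by_* helper methods were deprecated in Selenium 4 and later removed, so the examples below use the current form. Import By first:

python

from selenium.webdriver.common.by import By

- By ID:

python

element = driver.find_element(By.ID, 'element_id')

- By Name:

python

element = driver.find_element(By.NAME, 'element_name')

- By XPath:

python

element = driver.find_element(By.XPATH, '//tag[@attribute="value"]')

- By CSS Selector:

python

element = driver.find_element(By.CSS_SELECTOR, 'css_selector')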

Performing Actions

You can perform actions like clicking, typing, and submitting forms:

- Clicking a Button:

python

from selenium.webdriver.common.by import By

button = driver.find_element(By.ID, 'submit_button')

button.click()

- Typing Text:

python

text_field = driver.find_element(By.NAME, 'username')

text_field.send_keys('my_username')

- Submitting a Form:

python

form = driver.find_element(By.ID, 'login_form')

form.submit()

Closing the Browser

To close the browser, use:

python

driver.quit()
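If a script raises an exception before it reaches `driver.quit()`, the browser process can be left running. One way to guard against that is a small context manager. This is a minimal sketch, and the name `browser_session` and its `make_driver` parameter are assumptions for illustration, not part of Selenium.

```python
from contextlib import contextmanager


@contextmanager
def browser_session(make_driver):
    """Create a driver, hand it to the caller, and always quit it afterwards."""
    driver = make_driver()
    try:
        yield driver
    finally:
        # Runs on normal exit and when the with-block raises an exception.
        driver.quit()


# Typical use with Selenium (commented out so the sketch runs without a browser):
# from selenium import webdriver
# with browser_session(webdriver.Chrome) as driver:
#     driver.get('https://example.com')
```

Passing a factory such as `webdriver.Chrome` rather than an existing driver keeps creation and cleanup in one place.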

5. Advanced Web Interaction

Handling Dropdowns

Select options from dropdowns using the Select class:

python

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import Select

dropdown = Select(driver.find_element(By.ID, 'dropdown'))

dropdown.select_by_visible_text('Option Text')

Handling Multiple Windows

Switch between multiple browser windows:

python

# Open a new window, then switch to it

driver.execute_script('window.open()')

driver.switch_to.window(driver.window_handles[1])

Handling Tabs

Handle multiple browser tabs similarly to windows:

python

driver.execute_script('window.open("https://example.com", "_blank")')

driver.switch_to.window(driver.window_handles[1])
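Indexing `window_handles[1]` assumes that exactly one other window exists and that handles come back in a particular order. A more defensive sketch records the handles before opening the window and switches to whichever handle is new. The helper name `switch_to_new_window` is hypothetical.

```python
def switch_to_new_window(driver, known_handles):
    """Switch to the first window handle that is not in known_handles.

    Returns the new handle, or raises LookupError if no new window exists.
    """
    for handle in driver.window_handles:
        if handle not in known_handles:
            driver.switch_to.window(handle)
            return handle
    raise LookupError("no new window or tab was opened")


# Typical use (requires a live Selenium driver):
# before = set(driver.window_handles)
# driver.execute_script('window.open("https://example.com", "_blank")')
# switch_to_new_window(driver, before)
```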

6. Handling Alerts, Frames, and Windows

Handling Alerts

Accept or dismiss JavaScript alerts:

python

# Switch to the open alert

alert = driver.switch_to.alert

alert.accept()  # confirm the alert (OK)

# alert.dismiss()  # or cancel it instead; each alert can be handled only once

Handling Frames

Switch to an iframe or frame:

python

driver.switch_to.frame('frame_name_or_id')

Switching Back to Default Content

Switch back to the main content:

python

driver.switch_to.default_content()
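Forgetting to switch back after working in a frame is a common source of "element not found" errors. The pairing of the two calls above can be sketched as a context manager. The name `inside_frame` is an assumption for illustration.

```python
from contextlib import contextmanager


@contextmanager
def inside_frame(driver, frame_reference):
    """Switch into a frame for the duration of a with-block, then switch back."""
    driver.switch_to.frame(frame_reference)
    try:
        yield driver
    finally:
        # Return to the top-level document even if the body raised.
        driver.switch_to.default_content()


# Typical use (requires a live Selenium driver):
# with inside_frame(driver, 'frame_name_or_id'):
#     ...  # locate and interact with elements inside the frame
```

Note that `default_content()` returns to the top-level page. For nested frames, `driver.switch_to.parent_frame()` moves up a single level instead.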

7. Working with Web Forms

Filling Out Forms

Fill out forms by locating input fields and sending keys:

python

from selenium.webdriver.common.by import By

driver.find_element(By.NAME, 'username').send_keys('my_username')

driver.find_element(By.NAME, 'password').send_keys('my_password')

Submitting Forms

Submit forms by finding the submit button and clicking it:

python

driver.find_element(By.NAME, 'submit').click()

8. Taking Screenshots

Capturing Screenshots

Take a screenshot of the current window:

python

driver.save_screenshot('screenshot.png')
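A fixed file name such as `screenshot.png` is overwritten on every run. The sketch below builds a timestamped name instead. The helpers `screenshot_path` and `capture` and the naming scheme are assumptions for illustration, while `save_screenshot` is the same driver method used above.

```python
from datetime import datetime
from pathlib import Path


def screenshot_path(directory, label, moment=None):
    """Build a path such as screenshots/login_20240131_154500.png."""
    moment = moment or datetime.now()
    stamp = moment.strftime("%Y%m%d_%H%M%S")
    return Path(directory) / f"{label}_{stamp}.png"


def capture(driver, directory, label):
    """Save a screenshot under a timestamped name and return the path used."""
    path = screenshot_path(directory, label)
    path.parent.mkdir(parents=True, exist_ok=True)
    # save_screenshot returns False if the file could not be written.
    if not driver.save_screenshot(str(path)):
        raise OSError(f"could not write screenshot to {path}")
    return path
```

With a live driver, `capture(driver, 'screenshots', 'login')` would write one new file per call.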

9. Handling JavaScript and Dynamic Content

Executing JavaScript

Execute JavaScript code within the context of the current page:

python

result = driver.execute_script('return document.title')

print(result)

Waiting for Elements

Handle dynamic content by waiting for elements to appear:

python

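from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

# Wait up to 10 seconds (an example timeout) for the element to be present

element = WebDriverWait(driver, 10).until(

    EC.presence_of_element_located((By.ID, 'dynamic_element'))

)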
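To make the idea behind an explicit wait concrete, here is a plain-Python sketch of the polling loop: call a condition repeatedly until it returns something truthy or a deadline passes. This is an illustration only, not Selenium's implementation. The name `wait_until` and its default values are assumptions, and Selenium's `WebDriverWait` additionally ignores certain exceptions while polling.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once timeout seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

Unlike a fixed `time.sleep(10)`, this returns as soon as the condition holds, so fast pages are not slowed down by the worst-case delay.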

10. Managing Cookies and Sessions

Adding Cookies

Add cookies to the browser session:

python

driver.add_cookie({'name': 'cookie_name', 'value': 'cookie_value'})

Getting Cookies

Retrieve cookies from the browser:

python

cookies = driver.get_cookies()

print(cookies)

Deleting Cookies

Delete specific or all cookies:

python

driver.delete_cookie('cookie_name')

driver.delete_all_cookies()
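The cookie methods above can be combined to carry a session from one run to the next, for example to avoid logging in every time. The following is a minimal sketch that stores cookies in a JSON file. The helper names `save_cookies` and `load_cookies` and the file format are assumptions for illustration.

```python
import json
from pathlib import Path


def save_cookies(driver, path):
    """Write the session's cookies to a JSON file."""
    Path(path).write_text(json.dumps(driver.get_cookies(), indent=2))


def load_cookies(driver, path):
    """Add cookies from a JSON file to the session and return how many were added."""
    cookies = json.loads(Path(path).read_text())
    for cookie in cookies:
        driver.add_cookie(cookie)
    return len(cookies)
```

The browser must already be on a page of the cookie's domain when `add_cookie` is called, so navigate with `driver.get(...)` before calling `load_cookies`, then reload the page to have the cookies take effect.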

11. Running Tests in Headless Mode

Running in Headless Mode

Headless mode allows running browsers without a graphical user interface, useful for automated tests:

python

from selenium.webdriver.chrome.options import Options

chrome_options = Options()

chrome_options.add_argument('--headless')

driver = webdriver.Chrome(options=chrome_options)

Headless Mode for Firefox

Use headless mode with Firefox:

python

from selenium.webdriver.firefox.options import Options

firefox_options = Options()

firefox_options.add_argument('-headless')  # newer Selenium releases no longer support options.headless

driver = webdriver.Firefox(options=firefox_options)
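It is often convenient to run headless on a build server but with a visible browser on a developer machine. One way to do that is to decide from an environment variable. In this sketch the variable name `SELENIUM_HEADLESS` and both helper names are hypothetical, and the window-size flag is a Chrome argument included on the assumption that a fixed viewport is wanted in headless runs.

```python
import os


def headless_requested(environ=None):
    """Return True if SELENIUM_HEADLESS (a hypothetical variable) is set to 1, true, or yes."""
    environ = os.environ if environ is None else environ
    value = environ.get("SELENIUM_HEADLESS", "")
    return value.strip().lower() in {"1", "true", "yes"}


def chrome_arguments(headless):
    """Return the command-line arguments to pass to Chrome's Options.add_argument()."""
    arguments = ["--window-size=1920,1080"]
    if headless:
        arguments.append("--headless")
    return arguments


# Typical use (requires Selenium and Chrome):
# from selenium import webdriver
# from selenium.webdriver.chrome.options import Options
# chrome_options = Options()
# for argument in chrome_arguments(headless_requested()):
#     chrome_options.add_argument(argument)
# driver = webdriver.Chrome(options=chrome_options)
```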

12. Integrating Selenium with Other Tools

Integrating with pytest

Use pytest for writing and running tests:

- Install pytest:

bash

pip install pytest

- Write a test:

python

from selenium import webdriver

def test_example():

    driver = webdriver.Chrome()

    try:

        driver.get('https://example.com')

        assert driver.title == 'Example Domain'

    finally:

        driver.quit()  # close the browser even if the assertion fails

- Run tests:

bash

pytest

Integrating with Jenkins

Automate test execution using Jenkins:

- Install Jenkins: Follow the Jenkins installation guide.

- Create a Jenkins Job: Set up a job to execute your Selenium tests.

- Configure the Job: Add build steps to install dependencies and run your tests.

13. Debugging and Troubleshooting

Debugging Tests

Use debugging techniques to troubleshoot test failures:

- Add Logs: Use logging to record test progress and failures.

- Use assert Statements: Ensure conditions are met and catch errors early.

- Run Tests Manually: Run tests manually to understand their behavior.
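As an illustration of the logging advice, the sketch below wraps each test step in a context manager that records when the step starts and whether it succeeded or failed. It uses only Python's standard `logging` module. The logger name `selenium_tests` and the helper `logged_step` are assumptions for illustration.

```python
import logging
from contextlib import contextmanager

logger = logging.getLogger("selenium_tests")


@contextmanager
def logged_step(description):
    """Log the start, success, or failure of one test step."""
    logger.info("START %s", description)
    try:
        yield
    except Exception:
        # logger.exception records the traceback along with the message.
        logger.exception("FAILED %s", description)
        raise
    else:
        logger.info("OK %s", description)


# Typical use (requires a live Selenium driver):
# logging.basicConfig(level=logging.INFO)
# with logged_step("open home page"):
#     driver.get('https://example.com')
```

Because the exception is re-raised, the test still fails as usual. The log simply shows which step it failed in.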

Common Issues

- Element Not Found: Ensure the element locator is correct and the element is loaded.

- Timeout Errors: Increase wait times for elements or use explicit waits.

- Browser Crashes: Check WebDriver and browser versions for compatibility.

14. Best Practices for Selenium Automation

Write Maintainable Code

- Use Page Object Model: Encapsulate page interactions in classes.

- Follow Naming Conventions: Use descriptive names for methods and variables.
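A minimal sketch of the Page Object Model might look like the following. The login page and its field names (`username`, `password`, `submit`) are hypothetical, borrowed from the form examples earlier in this guide. The locators are written as plain tuples so the sketch has no imports. In real code you would normally write `(By.NAME, 'username')`, which has the same value.

```python
class LoginPage:
    """Page object for a hypothetical login page."""

    # Locator tuples; "name" is the string value of Selenium's By.NAME.
    USERNAME = ("name", "username")
    PASSWORD = ("name", "password")
    SUBMIT = ("name", "submit")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        """Fill in both fields and click the submit button."""
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


# Typical use (requires a live Selenium driver):
# LoginPage(driver).log_in('my_username', 'my_password')
```

Tests then call `log_in()` and never mention locators, so a change to the page's markup is fixed in one class rather than in every test.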

Implement Robust Waits

- Use Explicit Waits: Wait for specific conditions rather than hard-coded delays.

Handle Exceptions

- Catch Exceptions: Handle exceptions gracefully and provide meaningful error messages.
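One way to handle transient failures gracefully is a small retry helper that reruns an action a limited number of times and reports a descriptive error if it never succeeds. This is a sketch under assumptions: the name `retry` and its defaults are illustrative. With Selenium you would typically pass specific exception types from `selenium.common.exceptions`, such as `StaleElementReferenceException`, rather than catching everything.

```python
import time


def retry(action, attempts=3, delay=0.5, exceptions=(Exception,)):
    """Run action() up to `attempts` times.

    Returns the first successful result. After the final failure, raises
    RuntimeError with a descriptive message, chained to the original error.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except exceptions as error:
            if attempt == attempts:
                raise RuntimeError(
                    f"action failed after {attempts} attempts: {error}"
                ) from error
            time.sleep(delay)


# Typical use (requires a live Selenium driver):
# retry(lambda: driver.find_element('id', 'submit_button').click(), attempts=3)
```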

Keep Dependencies Updated

- Update WebDriver: Regularly update WebDriver to match browser versions.

- Update Selenium Library: Keep the Selenium library up-to-date to access new features and bug fixes.
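A quick way to spot a stale driver is to compare major version numbers. The sketch below assumes dotted version strings and assumes that matching major versions is the relevant check, which is generally the expectation for Chrome and ChromeDriver. Other drivers follow their own compatibility tables. Both helper names are hypothetical.

```python
def major_version(version):
    """Return the leading integer of a dotted version string, e.g. 114 for '114.0.5735.90'."""
    return int(version.strip().split(".")[0])


def same_major_version(browser_version, driver_version):
    """Return True when both version strings share the same major version."""
    return major_version(browser_version) == major_version(driver_version)


# With a live Chrome session the versions are usually available from
# driver.capabilities, for example under the 'browserVersion' key.
```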

15. Legal and Ethical Considerations

Respect Website Policies

- Check Terms of Service: Ensure that automation complies with the website’s terms of service.

- Use APIs: Prefer using official APIs if available instead of scraping.

Avoid Overloading Servers

- Implement Throttling: Limit the frequency of requests to avoid overwhelming servers.
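Throttling can be as simple as enforcing a minimum interval between page loads. The class below is a minimal sketch of that idea. The name `Throttle` and the injectable `clock` and `sleep` parameters are assumptions for illustration, and the parameters exist mainly so the behavior can be tested without real delays.

```python
import time


class Throttle:
    """Enforce a minimum interval between successive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, if any

    def wait(self):
        """Sleep just long enough to keep calls min_interval seconds apart."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Typical use (requires a live Selenium driver; the URL list is hypothetical):
# throttle = Throttle(min_interval=2.0)
# for url in urls:
#     throttle.wait()
#     driver.get(url)
```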

Handle Personal Data Responsibly

- Ensure Privacy: Avoid collecting or mishandling personal or sensitive data.

16. Conclusion

Selenium is a powerful tool for web automation that supports a wide range of browsers and programming languages. By understanding its components and following best practices, you can automate repetitive tasks, perform testing, and extract data efficiently.

This guide has covered the essentials of setting up Selenium, performing basic and advanced web interactions, handling dynamic content, and integrating with other tools. Always ensure that your automation practices are legal and ethical, and use the technology responsibly to maximize its benefits.

With these skills, you are well-equipped to harness the full potential of Selenium for your web automation projects.